Getting Started with VSCode and GitHub

Step 1: Download VSCode and install it.

The settings I personally choose...

Step 2: Configure PowerShell syntax highlighting and VSIcons

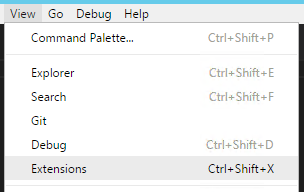

Click View -> Extensions

Click Install next to vscode-icons. This isn't required, it is just a really nice touch IMO.

After you click Install, don't click Reload yet. Search for powershell and click Install next to PowerShell (the StackOverflow extension is awesome too!), then click Reload when it is done.

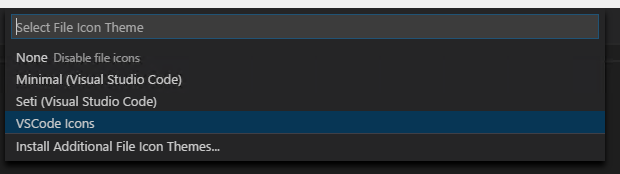

Click File -> Preferences -> File Icon Theme...

Select VSCode Icons from the list...

Step 3: Download and Install Git SCM

For most users the default installation options are sufficient. The installer asks a lot of questions, but for most people the defaults are fine.

Step 4: Basic Git Configuration

git config --global user.name "Bob Dole"

git config --global user.email "user@email.com"

Step 5: Create and Pull a GitHub Repository

When you log into GitHub you will see a + symbol in the upper right corner. Click it and choose "New Repository".

Enter a Repository Name (in this case I chose PowerShell-Scripts); the description is optional.

Launch Git BASH (this was installed as part of Git) and navigate to the local folder where you want the repository to reside.

Once there type the following:

git clone <https address of your repository>.git

You will be prompted to log in to GitHub...

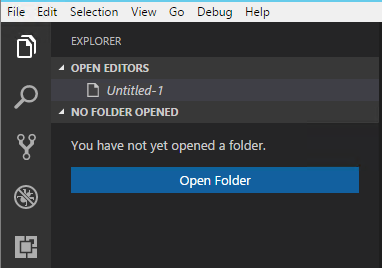

Once you log in, open the folder you just cloned the repository to in VSCode. The way we did it, there should be a subfolder called "PowerShell-Scripts" at the path you were in when you ran git clone. Click the Explorer tab on the left-hand Activity Bar.

Browse to the folder you cloned the repository to.

Once you have opened this folder click File -> New File

Once the file is open we need to tell VSCode that this is a PowerShell file. If you open a .ps1 file it knows automatically, but a new file has no extension yet, so we need to tell it. Hit CTRL+SHIFT+P, type "change lang" and hit Enter, then type "ps" and hit Enter. Now VSCode knows which syntax highlighting and IntelliSense to use.

You will also see that upon doing that it will open a PowerShell terminal pane below your script pane.

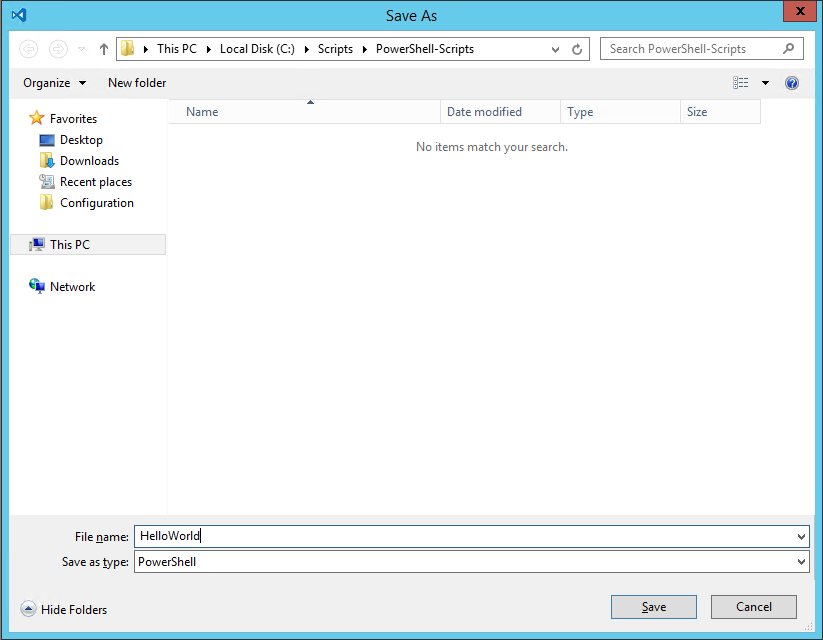

After you type some example code click File -> Save (or hit CTRL+S) and save your file to the folder your repository exists in.

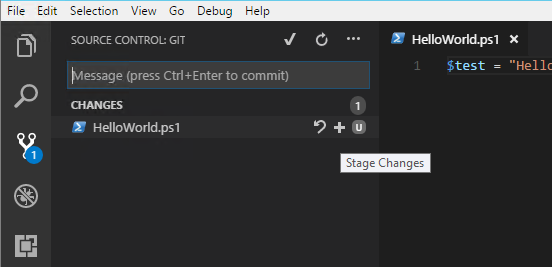

Click on Source Control in the Activity Bar and you should now see your file there. When you hover over it you should see a + symbol. Click that to stage the changes you just made.

You should see the "U" change to an "A". Click the Check Mark at the top of the pane.

It will ask you to enter a commit comment. This should be a succinct description of the changes you are committing; in many cases your first commit comment will be "initial commit". When you view the files on GitHub you can see the commit comment associated with the last commit that included that individual file/folder.

Once your commit is complete you need to "Push" your changes up to GitHub. To do this, click the Ellipsis on the Source Control pane and select "Push". Barring any issues, the changes will now be pushed up to GitHub, available for cloning and syncing with new users or your other development locations.
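If you prefer the terminal pane to the Source Control buttons, the same stage, commit and push sequence can be sketched with plain git commands. The sandbox below is an assumption for illustration only: a throwaway bare repository in the temp folder stands in for GitHub, and Hello-World.ps1 is a made-up file name, so nothing here touches your real repository.

```powershell
# Throwaway sandbox: a local bare repository stands in for GitHub (all paths are hypothetical).
$sandbox = Join-Path ([IO.Path]::GetTempPath()) ("git-demo-" + [Guid]::NewGuid())
$remote  = Join-Path $sandbox 'remote.git'
$work    = Join-Path $sandbox 'PowerShell-Scripts'
New-Item -Path $sandbox -ItemType Directory -Force | Out-Null

git init --bare --quiet $remote
git clone --quiet $remote $work 2>$null      # cloning an empty repository prints a warning

Push-Location $work
git config user.name  "Bob Dole"             # local to the sandbox, same values as Step 4
git config user.email "user@email.com"

Set-Content -Path 'Hello-World.ps1' -Value 'Write-Host "Hello World"'

git add Hello-World.ps1                      # same as clicking the + symbol
git commit --quiet -m "initial commit"       # same as clicking the Check Mark
git push --quiet origin HEAD 2>$null         # same as Ellipsis -> Push
Pop-Location

# Ask the stand-in remote what it received.
$pushed = git --git-dir $remote log --all --oneline
$pushed
```

Against a real clone you would only need the add, commit and push lines, run from inside the repository folder.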

There you go, changes are now secure, feel free to break your incredibly complex Hello World and you will be able to recover the last working version and collaborate with others on long term scripts requiring multiple developers.

DSC: Script Resource GetScript

If you look on the TechNet page for the Script Resource you will see

GetScript = { <# This must return a hash table #> }

Which is technically speaking, true...usually...right up until the point you try to run Get-DscConfiguration on a machine, in which case it will get to that script resource and die saying:

The PowerShell provider returned results that are not valid from Get-TargetResource. The <keyname> key is not a valid property in the corresponding provider schema file. The results from Get-TargetResource must be in a Hashtable format. The keys in the Hashtable must be the same as the properties in the corresponding provider schema file.

The consensus around the web is that the error is saying you have to return a hashtable with keys that match the properties of the schema, so in this case the schema for the Script resource is:

#pragma namespace("\\\\.\\root\\microsoft\\windows\\DesiredStateConfiguration")

[ClassVersion("1.0.0"),FriendlyName("Script")]

class MSFT_ScriptResource : OMI_BaseResource

{

[Key] string GetScript;

[Key] string SetScript;

[Key] string TestScript;

[write,EmbeddedInstance("MSFT_Credential")] string Credential;

[Read] string Result;

};

Which means in order for your Script resource to be compliant you need to return:

GetScript = {return @{ Result = ();GetScript=$GetScript;TestScript=$TestScript;SetScript=$SetScript}}

But when you think about it, this doesn't make a lot of sense. In every other resource I can think of it makes absolute sense, because the parameters in the schema determine the status of the resource you want to control, not how you control it and how you test for it.

It would be like Get-TargetResource for the Registry resource not returning the information about the key, its value, etc. but rather returning that AND returning the entire contents of MSFT_RegistryResource.psm1 which would make literally no sense. We don't care HOW you check or HOW you set, and returning a Get-Script with the contents of Get-Script is...batty...we care about the resource being controlled.

Luckily, the statement that "the keys need to match the parameters" can be interpreted to mean you need to match ALL of them, or it can be interpreted to mean "they just need to exist" and in the case of the Script resource Result does exist. And that is what we need to return.

GetScript = {return @{Result=''}}

They really need to update the TechNet page to say "GetScript needs to return a hash table with at least one key matching a parameter in the schema for the resource".

No need to return potentially hundreds of lines of code in some M.C. Escher-like construct containing itself. Just stick to returning information about the resource you are controlling. If your script sets the contents of a file, return the contents of that file. Not the contents of the file AND the script you used to set it AND the script you used to test it.
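As a minimal sketch of that advice, here is what a GetScript body could look like for a script that sets the contents of a file. The file name motd.txt and its location in the temp folder are assumptions made up for this example; the only thing taken from the schema is the Result key.

```powershell
# Hypothetical file managed by the Script resource; the path is an assumption for illustration.
$managedFile = Join-Path ([IO.Path]::GetTempPath()) 'motd.txt'
Set-Content -Path $managedFile -Value 'Hello from DSC'

# Body of GetScript: report the state of the resource under the single "Result" key.
$getScript = {
    $path = Join-Path ([IO.Path]::GetTempPath()) 'motd.txt'
    if (Test-Path -Path $path) {
        # Result is declared as a string in the schema, so flatten the file to one string.
        return @{ Result = (Get-Content -Path $path | Out-String).Trim() }
    }
    return @{ Result = '' }
}

# Invoke the block the way you would test it by hand.
$state = & $getScript
$state.Result
```

Inside a configuration the same body would go between the braces of GetScript = { ... }.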

DSC: Registry Resource Binary Comparison Bug

Ever used DSC to set a binary registry value only to find out no matter how many times it sets it, it always thinks the value is incorrect?

The problem lies in MSFT_RegistryResource.psm1, @Ln926

$Data | % {$retString += [String]::Format("{0:x}", $_)}

Should be:

$Data | % {$retString += [String]::Format("{0:x2}", $_)}

Because it isn't, the value data, in this case:

ValueData = @("8232c580d332674f9cab5df8c206fcd8")

Which is 82 32 c5 80 d3 32 67 4f 9c ab 5d f8 c2 06 fc d8 in hex, dies because that 06 towards the end gets turned into a 6. Even if that were valid hex it wouldn't match the input, and thus Test-TargetResource fails every single time.
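You can see the difference between the two format strings without touching DSC at all. This is just a sketch that runs both versions of the line over the same 16 bytes; the variable names $buggy and $fixed are mine, not the module's.

```powershell
# The 16 bytes from the ValueData example above.
[byte[]]$Data = 0x82,0x32,0xc5,0x80,0xd3,0x32,0x67,0x4f,0x9c,0xab,0x5d,0xf8,0xc2,0x06,0xfc,0xd8

$buggy = ''
$fixed = ''
$Data | ForEach-Object {
    $buggy += [String]::Format('{0:x}',  $_)   # no padding: 0x06 becomes "6"
    $fixed += [String]::Format('{0:x2}', $_)   # two digits: 0x06 becomes "06"
}

$buggy          # 8232c580d332674f9cab5df8c26fcd8
$fixed          # 8232c580d332674f9cab5df8c206fcd8
$buggy.Length   # 31
$fixed.Length   # 32
```

The unpadded string is one character short, so it can never compare equal to the 32-character value in the configuration.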

Bundle DSC Waves for Pull Server Distribution

This assumes you have WinRAR installed to the default path. Be aware that it also deletes the files it archives (the -df switch) after it creates the zip files.

After running this script copy the resulting files to the DSC server in the following location: "C:\Program Files\WindowsPowerShell\DscService\Modules"

$modpath = "-path to dsc wave-"

$output = "-path to save wave to-"

[regex]$reg = "([0-9\.]{3,12})"

if((Test-Path $output) -ne $true){ New-Item -Path $output -ItemType Directory -Force }

foreach($module in (Get-ChildItem -Path $modpath)) {

$psd1 = ($module.FullName+"\"+$module.Name+".psd1")

$content = Get-Content $psd1

foreach($line in $content) {

if($line.Contains("ModuleVersion")) {

$outpath = $output+"\"+$module.Name+"_"+($reg.Match($line).Captures)

Write-Host ""

if(Test-Path -Path $outpath) {

Copy-Item -Path $module.FullName -Destination $outpath -Recurse

}else{

New-Item -Path $outpath -ItemType Directory -Force

Copy-Item -Path $module.FullName -Destination $outpath -Recurse

}

& "C:\Program Files\WinRar\winrar.exe" a -afzip -df -ep1 ($outpath+".zip") $outpath

}

}

}

Start-Sleep -Seconds 1

New-DscCheckSum -Path $output
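The naming part of the script is easier to follow in isolation. The sketch below applies the same regex to a single manifest line and builds the ModuleName_Version zip name from it. The module name xDemoModule and the version number are made up for the example.

```powershell
[regex]$reg = "([0-9\.]{3,12})"

# Hypothetical manifest line and module name, for illustration only.
$line   = "ModuleVersion = '3.0.0.0'"
$module = 'xDemoModule'

# The first run of 3 to 12 digits and dots on the line is taken as the version.
$version = $reg.Match($line).Value
$zipName = '{0}_{1}.zip' -f $module, $version

$zipName   # xDemoModule_3.0.0.0.zip
```

On PowerShell 5 and later, Import-PowerShellDataFile can read the whole .psd1 as a hashtable, which would avoid the line-by-line search, but I have not reworked the script above to use it.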

PowerShell DSC: Remote Monitoring Configuration Propagation

So if you are like me you are not really interested in crossing your fingers and hoping your servers are working right. Which is why it is uniquely frustrating that DSC does not have anything resembling a dashboard (not a complaint really, it is early days, but in practical application not knowing something went down is...not really an option unless you like being sloppy).

The way I build my servers: I have an XML file with a list of servers, their role, and their role GUID. Baked into the master image is a simple bootstrap script that goes and gets the build script. Since I'm using DSC, the "build" script doesn't really build much; it mostly just bootstraps the DSC process. The first script to run is:

$nodeloc = "\\dscserver\DSC\Nodes\nodes.xml"

# Get node information.

try {

[xml]$nodes = Get-Content -Path $nodeloc -ErrorAction 'Stop'

$role = $nodes.hostname.$env:COMPUTERNAME.role

}

catch{ Write-Host "Could not find matching node, exiting.";Break }

# Set correct build script location.

switch($role) {

"XenAppPKG" { $scriptloc = "\\dscserver\DSC\Scripts\pkgbuild.ps1" }

"XenAppQA" { $scriptloc = "\\dscserver\DSC\Scripts\qabuild.ps1" }

"XenAppProd" { $scriptloc = "\\dscserver\DSC\Scripts\prodbuild.ps1" }

}

Write-Host "Script location set to:"$scriptloc

if((Test-Path -Path "C:\scripts") -ne $true){ New-Item -Path "C:\scripts" -ItemType Directory -Force -ErrorAction 'Stop' }

Write-Host "Checking build script availability..."

while((Test-Path -Path $scriptloc) -ne $true){ Start-Sleep -Seconds 15 }

Write-Host "Fetching build script..."

while((Test-Path -Path "C:\scripts\build.ps1") -ne $true){ Copy-Item -Path $scriptloc -Destination "C:\scripts\build.ps1" -ErrorAction 'SilentlyContinue' }

Write-Host "Executing build script..."

& C:\Windows\System32\WindowsPowerShell\v1.0\powershell.exe -file "C:\scripts\build.ps1"

The information it looks for in the nodes.xml file looks like this:

<hostname>

<A01 role="XenAppProd" guid="22e35281-49c6-40f3-9fd7-ad7f8d69c84d" />

<A02 role="XenAppProd" guid="22e35281-49c6-40f3-9fd7-ad7f8d69c84d" />

<A03 role="XenAppProd" guid="22e35281-49c6-40f3-9fd7-ad7f8d69c84d" />

<A04 role="XenAppProd" guid="22e35281-49c6-40f3-9fd7-ad7f8d69c84d" />

<B01 role="XenAppProd" guid="22e35281-49c6-40f3-9fd7-ad7f8d69c84d" />

<B02 role="XenAppProd" guid="22e35281-49c6-40f3-9fd7-ad7f8d69c84d" />

<B03 role="XenAppProd" guid="22e35281-49c6-40f3-9fd7-ad7f8d69c84d" />

<B04 role="XenAppProd" guid="22e35281-49c6-40f3-9fd7-ad7f8d69c84d" />

</hostname>
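To make the lookup concrete, here is a small sketch of how the bootstrap script reads a role out of that file. It uses an inline copy of two nodes instead of the share, and $computer stands in for $env:COMPUTERNAME; Z99 is a made-up host name that is not in the list.

```powershell
# Inline copy of two entries from nodes.xml so the example does not need the share.
[xml]$nodes = @'
<hostname>
  <A01 role="XenAppProd" guid="22e35281-49c6-40f3-9fd7-ad7f8d69c84d" />
  <B01 role="XenAppProd" guid="22e35281-49c6-40f3-9fd7-ad7f8d69c84d" />
</hostname>
'@

$computer = 'A01'                     # stands in for $env:COMPUTERNAME
$entry    = $nodes.hostname.$computer # element named after the machine
$role     = $entry.role
$guid     = $entry.guid

# A host that is not listed simply comes back as $null, no exception is thrown.
$missing = $nodes.hostname.'Z99'

"{0} -> role {1}, guid {2}" -f $computer, $role, $guid
```

One thing this shows: as far as I can tell, a machine missing from the file leaves $role empty rather than landing in the catch block, so a check for an empty $role would be a sensible addition.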

I won't go any further into this, as most of it has already been covered here before. The main gist is that my solution to this problem relies on the fact that I use the XML file to provision DSC on these machines.

There are a couple of modifications I need to make to my DSC config to enable tracking. Note that the first item is only there so I can override the GUID from the command line if I want. In reality you could just set the ValueData to ([GUID]::NewGuid()).ToString() and be fine.

The first bit of code takes place before I start my Configuration block. The actual Registry resource is the very last resource in the Configuration block (less chance of false positives due to an error mid-config).

param (

[string]$verGUID = ([GUID]::NewGuid()).ToString()

)

...

Registry verGUID {

Ensure = "Present"

Key = "HKLM:\SOFTWARE\PostBuild"

ValueName = "verGuid"

ValueData = $verGUID

ValueType = "String"

}

From here we get to the important part:

[regex]$node = '(\[Registry\]verGUID[A-Za-z0-9\";\r\n\s=:\\ \-\.\{]*)'

[regex]$guid = '([a-z0-9\-]{36})'

$path = "\\dscserver\Configuration\"

$pkg = @()

$qa = @()

$prod = @()

$watch = @{}

$complete = @{}

[xml]$nodes = (Get-Content "\\dscserver\DSC\Nodes\nodes.xml")

# Find a list of machine names and role guids.

foreach($child in $nodes.hostname.ChildNodes) {

switch($child.Role)

{

"XenAppPKG" { $pkg += $child.Name;$pkgGuid = $child.guid }

"XenAppQA" { $qa += $child.Name;$qaGuid = $child.guid }

"XenAppProd" { $prod += $child.Name;$prodGuid = $child.guid }

}

}

# Convert DSC GUID's to latest verGUID.

$pkgGuid = $guid.Match(($node.Match((Get-Content -Path ($path+$pkgGuid+".mof")))).Captures.Value).Captures.Value

$qaGuid = $guid.Match(($node.Match((Get-Content -Path ($path+$qaGuid+".mof")))).Captures.Value).Captures.Value

$prodGuid = $guid.Match(($node.Match((Get-Content -Path ($path+$prodGuid+".mof")))).Captures.Value).Captures.Value

# See if credentials exist in this session.

if($creds -eq $null){ $creds = (Get-Credential) }

# Make an initial pass, determine configured/incomplete servers.

if($pkg.Count -gt 0 -and $pkgGuid.Length -eq 36) {

foreach($server in $pkg) {

$test = Invoke-Command -ComputerName $server -Credential $creds -ScriptBlock{ (Get-ItemProperty -Path "HKLM:\SOFTWARE\PostBuild" -Name verGUID -ErrorAction 'SilentlyContinue').verGUID }

if($test -ne $pkgGuid) {

Write-Host ("Server {0} does not appear to be configured, adding to watchlist." -f $server)

$watch[$server] = $pkgGuid

}else{

Write-Host ("Server {0} appears to be configured. Adding to completed list." -f $server)

$complete[$server] = $true

}

}

}else{

Write-Host "No Pkg server nodes found or no verGUID detected in Pkg config. Skipping."

}

if($qa.Count -gt 0 -and $qaGuid.Length -eq 36) {

foreach($server in $qa) {

$test = Invoke-Command -ComputerName $server -Credential $creds -ScriptBlock{ (Get-ItemProperty -Path "HKLM:\SOFTWARE\PostBuild" -Name verGUID -ErrorAction 'SilentlyContinue').verGUID }

if($test -ne $qaGuid) {

Write-Host ("Server {0} does not appear to be configured, adding to watchlist." -f $server)

$watch[$server] = $qaGuid

}else{

Write-Host ("Server {0} appears to be configured. Adding to completed list." -f $server)

$complete[$server] = $true

}

}

}else{

Write-Host "No QA server nodes found or no verGUID detected in QA config. Skipping."

}

if($prod.Count -gt 0 -and $prodGuid.Length -eq 36) {

foreach($server in $prod) {

$test = Invoke-Command -ComputerName $server -Credential $creds -ScriptBlock{ (Get-ItemProperty -Path "HKLM:\SOFTWARE\PostBuild" -Name verGUID -ErrorAction 'SilentlyContinue').verGUID }

if($test -ne $prodGuid) {

Write-Host ("Server {0} does not appear to be configured, adding to watchlist." -f $server)

$watch[$server] = $prodGuid

}else{

Write-Host ("Server {0} appears to be configured. Adding to completed list." -f $server)

$complete[$server] = $true

}

}

}else{

Write-Host "No Production server nodes found or no verGUID detected in Production config. Skipping."

}

# Pause for meatbag digestion.

Start-Sleep -Seconds 10

# Monitor incomplete servers until all servers return matching verGUID's.

if($watch.Count -gt 0){ $monitor = $true }else{ $monitor = $false }

while($monitor -ne $false) {

$monitor = $false

$cleaner = @()

foreach($server in $watch.Keys) {

$test = Invoke-Command -ComputerName $server -Credential $creds -ScriptBlock{ (Get-ItemProperty -Path "HKLM:\SOFTWARE\PostBuild" -Name verGUID -ErrorAction 'SilentlyContinue').verGUID }

if($test -eq $watch[$server]) {

$complete[$server] = $true

$cleaner += $server

}else{

$monitor = $true

}

}

foreach($item in $cleaner){ $watch.Remove($item) }

Clear-Host

Write-Host "Configured Servers:`r`n"$complete.Keys

Write-Host "`r`n`r`nIncomplete Servers:`r`n"$watch.Keys

if($monitor -eq $true){ Start-Sleep -Seconds 10 }

}

Clear-Host

Write-Host "Configured Servers:`r`n"$complete.Keys

Write-Host "`r`n`r`nIncomplete Servers:`r`n"$watch.Keys
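The least obvious step in that script is the "Convert DSC GUID's to latest verGUID" part, so here is a sketch of the two regexes working on a MOF fragment. The fragment and the GUID in it are made up for illustration; the property order and layout of a real compiled MOF may differ.

```powershell
[regex]$node = '(\[Registry\]verGUID[A-Za-z0-9\";\r\n\s=:\\ \-\.\{]*)'
[regex]$guid = '([a-z0-9\-]{36})'

# Hypothetical MOF fragment; a real compiled MOF may order or format these properties differently.
$mof = @'
instance of MSFT_RegistryResource as $MSFT_RegistryResource1ref
{
 ResourceID = "[Registry]verGUID";
 ValueName = "verGuid";
 Key = "HKLM:\\SOFTWARE\\PostBuild";
 Ensure = "Present";
 ValueType = "String";
 ValueData = {
    "0b7c1f7e-5d3a-4c1e-9a51-2f6d8e4b9c10"
 };
};
'@

# Step 1: grab the text from the resource ID up to the first character outside the class ("}").
$block = $node.Match($mof).Value

# Step 2: the first 36-character run of lowercase letters, digits and hyphens is the verGUID.
$verGuid = $guid.Match($block).Value
$verGuid
```

Because [regex] objects are case sensitive, this relies on the GUID being lowercase, which is what ([GUID]::NewGuid()).ToString() produces.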

At the end of the day, is this a perfect solution? No. Bear in mind I just slapped this together to fill a void. Things could be objectified, cleaned up, probably streamlined, but honestly a PowerShell script is not a good dashboard. I would also rather the servers themselves flag their progress in a centralized location rather than being pinged by a script.

But that is really something best implemented by the PowerShell devs, as anything 3rd party would, IMO, be rather ugly. So if all we have right now is ugly, I'll take ugly and fast.

As always, use at your own risk, I cannot imagine how you could eat a server with this script but don't go using it as some definitive health-metric. Just use it as a way to get a rough idea of the health of your latest configuration push.

Citrix: Presentation Server 4.5, list applications and groups.

Quick script to connect to a 4.5 farm and pull a list of applications and associate them to the groups that control access to them. You will need to do a few things before this works:

If you are running this remotely you need to be in the "Distributed COM Users" group (Server 2k3) and will need to set up DCOM for impersonation. You can do this by running "Component Services", drilling down to the "local computer", right-clicking it and choosing Properties, then clicking General properties; the third option should be set to Impersonate.

Finally you will need View rights to the Farm. If you are doing this remotely there is a VERY strong chance of failure if the account you are LOGGED IN AS is not a "View Only" or higher admin in Citrix. RunAs seems to be incredibly hit or miss, mostly miss.

$start = Get-Date

$farm = New-Object -ComObject MetaFrameCOM.MetaFrameFarm

$farm.Initialize(1)

$apps = New-Object 'object[,]' $farm.Applications.Count,2

$row = 0

# Capture everything after the last "/" in the distinguished name.

[regex]$reg = '([^/]*)$'

foreach($i in $farm.Applications) {

$i.LoadData($true)

[string]$groups = ""

$clean = $reg.Match($i.DistinguishedName).Value

$apps[$row,0] = $clean

# Build a comma-separated list of the groups that control access to this app.

foreach($j in $i.Groups) {

if($groups.Length -lt 1){ $groups = $j.GroupName }else{ $groups = $groups+","+$j.GroupName }

}

$apps[$row,1] = $groups

$row++

}

# Dump the two-column array into a new Excel sheet in one assignment.

$excel = New-Object -ComObject Excel.Application

$excel.Visible = $true

$excel.DisplayAlerts = $false

$workbook = $excel.Workbooks.Add()

$sheet = $workbook.Worksheets.Item(1)

$sheet.Name = "Dashboard"

$range = $sheet.Range("A1",("B"+$farm.Applications.Count))

$range.Value2 = $apps

$(New-TimeSpan -Start $start -End (Get-Date)).TotalMinutes

App-V 5.0: PowerShell VE launcher.

Quick little script to enable you to launch local apps into a VE. It can be run in one of two ways:

Prompts the user for an App-V app and then the local executable to launch into the VE.

Accepts command line arguments to launch the specified exe into the specified VE.

Import-Module AppvClient

if($args.Count -ne 2) {

$action = Read-Host "App-V app to launch into (type 'list' for a list of apps):"

while($action -eq "list") {

$apps = Get-AppvClientPackage

foreach($i in $apps){ $i.Name }

$action = Read-Host "App-V app to launch into (type 'list' for a list of apps):"

}

try {

$app = Get-AppvClientPackage $action

}

catch {

Write-Host ("Failed to get App-V package with the following error: "+$_)

}

$strCmd = Read-Host "Local app to launch into VE:"

try {

Start-AppvVirtualProcess -AppvClientObject $app -FilePath $strCmd

}

catch {

Write-Host ("Failed to launch VE with following error: "+$_)

}

}else{

$app = Get-AppvClientPackage $args[0]

Start-AppvVirtualProcess -AppvClientObject $app -FilePath $args[1]

}

Usage:

- Prompt-mode: AppV-Launcher.ps1

- CMDLine Mode: AppV-Launcher.ps1 TortoiseHg C:\Windows\Notepad.exe

Note: The arguments are positional, so it must be Virtual App then Local Executable in that order otherwise it will fail. There is no try/catch on the CMDLine mode as it expects you to know what you are doing (and want as much information about what went wrong as possible) and there is no risk of damage.

PowerShell: Date Picker.

Quick function to prompt a user to select a date. Usage is pretty straightforward.

$var = $(DatePicker "<title>").ToShortDateString()

function DatePicker($title) {

[void] [System.Reflection.Assembly]::LoadWithPartialName("System.Windows.Forms")

[void] [System.Reflection.Assembly]::LoadWithPartialName("System.Drawing")

$global:date = $null

$form = New-Object Windows.Forms.Form

$form.Size = New-Object Drawing.Size(233,190)

$form.StartPosition = "CenterScreen"

$form.KeyPreview = $true

$form.FormBorderStyle = "FixedSingle"

$form.Text = $title

$calendar = New-Object System.Windows.Forms.MonthCalendar

$calendar.ShowTodayCircle = $false

$calendar.MaxSelectionCount = 1

$form.Controls.Add($calendar)

$form.TopMost = $true

$form.add_KeyDown({

if($_.KeyCode -eq "Escape") {

$global:date = $false

$form.Close()

}

})

$calendar.add_DateSelected({

$global:date = $calendar.SelectionStart

$form.Close()

})

$form.add_Shown({ $form.Activate() })

[void]$form.ShowDialog()

return $global:date

}

Write-Host (DatePicker "Start Date")

Citrix: Creating Reports.

A bit of a different gear here, but here are a couple of examples, one using Citrix 4.5 (Resource Manager) and one using Citrix 6.0 (EdgeSight).

$start = Get-Date

Import-Module ActiveDirectory

function SQL-Connect($server, $port, $db, $userName, $passWord, $query) {

$conn = New-Object System.Data.SqlClient.SqlConnection

$ctimeout = 30

$qtimeout = 120

$constring = "Server={0},{5};Database={1};Integrated Security=False;User ID={2};Password={3};Connect Timeout={4}" -f $server,$db,$userName,$passWord,$ctimeout,$port

$conn.ConnectionString = $constring

$conn.Open()

$cmd = New-Object System.Data.SqlClient.SqlCommand($query, $conn)

$cmd.CommandTimeout = $qtimeout

$ds = New-Object System.Data.DataSet

$da = New-Object System.Data.SqlClient.SqlDataAdapter($cmd)

$da.fill($ds)

$conn.Close()

return $ds

}

function Graph-Iterate($arList,$varRow,$varCol,$strPass) {

Write-Host $arList[$i].depName

foreach($i in $arList.Keys) {

if($arList[$i].duration -ne 0) {

if($arList[$i].depName.Length -gt 1) {

$varRow--

if($arList[$i].depName -eq $null){ $arList[$i].depName = "UNKNOWN" }

$sheet.Cells.Item($varRow,$varCol) = $arList[$i].depName

$varRow++

$sheet.Cells.Item($varRow,$varCol) = ("{0:N1}" -f $arList[$i].duration)

$varCol++

if($master -ne $true){ Iterate $arList[$i] $strPass }

}

}

}

return $varcol

}

function Iterate($arSub, $strCom) {

$indSheet = $workbook.Worksheets.Add()

$sheetName = ("{0}-{1}" -f $strCom,$arSub.depName)

Write-Host $sheetName

$nVar = 1

if($sheetName -eq "CSI-OPP MAX")

{

Write-Host "The Var is:"

Write-Host $nVar

$sheetName = "{0} {1}" -f $sheetName,$nVar

$nVar++

}

$strip = [System.Text.RegularExpressions.Regex]::Replace($sheetName,"[^0-9a-zA-Z_-]"," ");

if($strip.Length -gt 31) { $ln = 31 }else{ $ln = $strip.Length }

$indSheet.Name = $strip.Substring(0, $ln)

$count = $arSub.Keys.Count

$array = New-Object 'object[,]' $count,2

$arRow = 0

foreach($y in $arSub.Keys) {

if($y -ne "depName" -and $y -ne "duration" -and $y.Length -gt 1) {

$t = 0

$array[$arRow,$t] = $y

$t++

$array[$arRow,$t] = $arSub[$y]

$arRow++

}

}

$rng = $indSheet.Range("A1",("B"+$count))

$rng.Value2 = $array

}

function Create-Graph($lSheet,$lTop,$lLeft,$range, $number, $master, $catRange) {

# Add graph to Dashboard and configure.

$chart = $lSheet.Shapes.AddChart().Chart

$chartNum = ("Chart {0}" -f $cvar3)

$sheet.Shapes.Item($chartNum).Placement = 3

$sheet.Shapes.Item($chartNum).Top = $top

$sheet.Shapes.Item($chartNum).Left = $left

if($master -eq $true) {

$sheet.Shapes.Item($chartNum).Height = 500

$sheet.Shapes.Item($chartNum).Width = 1220

}else{

$sheet.Shapes.Item($chartNum).Height = 325

$sheet.Shapes.Item($chartNum).Width = 400

}

$chart.ChartType = 69

$chart.SetSourceData($range)

$chart.SeriesCollection(1).XValues = $catRange

}

$port = "<port>"

$server = "<sqlserver>"

$db = "<db>"

$user = "<db_user>"

$password = "<pass>"

$query = "SELECT p.prid, p.account_name, p.domain_name, p.dtfirst, cs.instid, cs.sessid, cs.login_elapsed, cs.dtlast, cs.session_type, s.logon_time, s.logoff_time

FROM dbo.principal AS p INNER JOIN

dbo.session AS s ON s.prid = p.prid INNER JOIN

dbo.ctrx_session AS cs ON cs.sessid = s.sessid"

#WHERE p.account_name LIKE 'a[_]%'

$userlist = SQL-Connect $server $port $db $user $password $query

$users = @{}

foreach($i in $userlist.Tables) {

if($i.account_name -notlike "h_*" -and $i.account_name -notlike "a_*" -and $i.account_name -ne "UNKNOWN" -and ([string]$i.logon_time).Length -gt 1 -and ([string]$i.logoff_time).Length -gt 1) {

try {

$info = Get-ADUser -Identity $i.account_name -Properties DepartmentNumber, Department, Company

}

catch {

$info = @{"Company"="Terminated";"Department"="Invalid";"DepartmentNumber"="0000"}

}

if($info.Company.Length -lt 2) {

$info = @{"Company"="Terminated";"Department"="Invalid";"DepartmentNumber"="0000"}

}

if($users.Contains($info.Company) -eq $false) {

$users[$info.Company] = @{}

$users[$info.Company]['duration'] = (New-TimeSpan $i.logon_time $i.logoff_time).TotalHours

}else{

$users[$info.Company]['duration'] = $users[$info.Company]['duration']+(New-TimeSpan $i.logon_time $i.logoff_time).TotalHours

}

if($users[$info.Company].Contains(([string]$info.DepartmentNumber)) -eq $false) {

$users[$info.Company][([string]$info.DepartmentNumber)] = @{}

$users[$info.Company][([string]$info.DepartmentNumber)]['depName'] = $info.Department

$users[$info.Company][([string]$info.DepartmentNumber)]['duration'] = (New-TimeSpan $i.logon_time $i.logoff_time).TotalHours

}else{

$users[$info.Company][([string]$info.DepartmentNumber)]['duration'] = $users[$info.Company][([string]$info.DepartmentNumber)]['duration']+(New-TimeSpan $i.logon_time $i.logoff_time).TotalHours

}

if($users[$info.Company][([string]$info.DepartmentNumber)].Contains($i.account_name) -eq $false) {

$users[$info.Company][([string]$info.DepartmentNumber)][$i.account_name] = (New-TimeSpan $i.logon_time $i.logoff_time).TotalHours

}else{

$users[$info.Company][([string]$info.DepartmentNumber)][$i.account_name] = $users[$info.Company][([string]$info.DepartmentNumber)][$i.account_name]+(New-TimeSpan $i.logon_time $i.logoff_time).TotalHours

}

}elseif($i.account_name -ne "UNKNOWN" -and ([string]$i.logon_time).Length -gt 1 -and ([string]$i.logoff_time).Length -gt 1) {

if($i.account_name -like "a_*") {

$info = @{"Company"="Administrators";"Department"="Elevated IDs (A)";"DepartmentNumber"="1111"}

}else{

$info = @{"Company"="Administrators";"Department"="Elevated IDs (H)";"DepartmentNumber"="2222"}

}

if($users.Contains("Administrators") -eq $false) {

$users['Administrators'] = @{}

$users['Administrators']['duration'] = (New-TimeSpan $i.logon_time $i.logoff_time).TotalHours

}else{

$users['Administrators']['duration'] = $users['Administrators']['duration']+(New-TimeSpan $i.logon_time $i.logoff_time).TotalHours

}

if($users['Administrators'].Contains($info.DepartmentNumber) -eq $false) {

$users['Administrators'][$info.DepartmentNumber] = @{}

$users['Administrators'][$info.DepartmentNumber]['depName'] = $info.Department

$users['Administrators'][$info.DepartmentNumber]['duration'] = (New-TimeSpan $i.logon_time $i.logoff_time).TotalHours

}else{

$users['Administrators'][$info.DepartmentNumber]['duration'] = $users['Administrators'][$info.DepartmentNumber]['duration']+(New-TimeSpan $i.logon_time $i.logoff_time).TotalHours

}

if($users['Administrators'][$info.DepartmentNumber].Contains($i.account_name) -eq $false) {

$users['Administrators'][$info.DepartmentNumber][$i.account_name] = (New-TimeSpan $i.logon_time $i.logoff_time).TotalHours

}else{

$users['Administrators'][$info.DepartmentNumber][$i.account_name] = $users['Administrators'][$info.DepartmentNumber][$i.account_name]+(New-TimeSpan $i.logon_time $i.logoff_time).TotalHours

}

}else{

if(([string]$i.logon_time).Length -lt 1 -and $i.account_name -ne "UNKNOWN"){ "No logon time: "+$i.account_name }

if(([string]$i.logoff_time).Length -lt 1 -and $i.account_name -ne "UNKNOWN"){ "No logoff time: "+$i.account_name }

}

}

# Create Excel object, setup spreadsheet, name main page.

$excel = New-Object -ComObject excel.application

$excel.Visible = $true

$excel.DisplayAlerts = $false

$workbook = $excel.Workbooks.Add()

$row = 1

$col = 1

$sheet = $workbook.Worksheets.Item(1)

$sheet.Name = "Dashboard"

# Populate tracking vars.

# $row is the starting row to begin entering data into text cells.

# $cvar tracks $left position, resets when it reaches 3.

# $cvar3 tracks $top position, after every third graph it increments +340.

$row = 202

$col = 2

$cvar = 1

$cvar3 = 1

$top = 10

$left = 10

# Iterate through main element (Companies), $z returns company name (MGTS, MR, etc.).

$min = ($sheet.Cells.Item(($row)-1,1).Address()).Replace("$", "")

$tmin = ($sheet.Cells.Item(($row)-1,2).Address()).Replace("$", "")

foreach($q in $users.Keys) {

$sheet.Cells.Item($row,1) = "Maritz Total Citrix Usage (by hours)"

$row--

if($q -eq "114"){ $q = "Training IDs" }

$sheet.Cells.Item($row,$col) = $q

$row++

$sheet.Cells.Item($row,$col) = ("{0:N1}" -f $users[$q].duration)

$col++

}

$max = ($sheet.Cells.Item($row,($col)-1).Address()).Replace("$", "")

$range = $sheet.Range($min,$max)

$range2 = $sheet.Range($tmin,$max)

Create-Graph $sheet $top $left $range $cvar3 $true $range2

$row++;$row++

$col = 2

$top = ($top)+510

$cvar3++

foreach($z in $users.Keys) {

if($z.Length -gt 1 -and $z -ne "112 MAS"){

# Setup chart location vars.

if($cvar -eq 1) {

$left = 10

}elseif($cvar -eq 2){

$left = 420

}elseif($cvar -eq 3) {

$left = 830

}

$col = 2

$sheet.Cells.Item($row,1) = $z

# Track chart range minimum cell address.

$min = ($sheet.Cells.Item(($row)-1,1).Address()).Replace("$", "")

$tmin = ($sheet.Cells.Item(($row)-1,2).Address()).Replace("$", "")

# Iterate through secondary element (Departments), $i returns department name.

# Graph-Iterate Here

$vLoc = Graph-Iterate $users[$z] $row $col $z

# Track chart range maximum cell address.

$max = ($sheet.Cells.Item($row,($vLoc)-1).Address()).Replace("$", "")

$range = $sheet.Range($min,$max)

$range2 = $sheet.Range($tmin,$max)

Create-Graph $sheet $top $left $range $cvar3 $false $range2

$row++;$row++

# Increment or reset tracking vars.

if($cvar -eq 3) {

$top = ($top)+340

}

if($cvar -eq 1 -or $cvar -eq 2){ $cvar++ }elseif($cvar -eq 3){ $cvar = 1}

$cvar3++

}

}

# Show dashboard page rather than some random department.

$sheet.Activate()

New-TimeSpan -Start $start -End (Get-Date)

PowerShell: Persistent Environment Variables.

This comes up pretty often and if you haven't dealt with env. variables much in scripting (or even batch files) you may be confused as to why your variables don't stick around. For example:

$Env:SFT_SOFTGRIDSERVER = "appserver"

Perfectly valid, but only for that PowerShell session. Not sure why, but they make you use .Net to set it permanently. While syntactically more complex, it isn't difficult.

[Environment]::SetEnvironmentVariable("SFT_SOFTGRIDSERVER", "appvserver", "Machine")

Likewise you can get EVs:

[Environment]::GetEnvironmentVariable("SFT_SOFTGRIDSERVER", "Machine")

It is important to note that once you set it with .Net you won't see it in $Env until you start a new session; you HAVE to retrieve it via .Net in order to see it in the same session. If you really need some functionality of $Env you can add it both ways, but I honestly can't think of a good reason you would need to do that. It won't harm anything though.
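If you do want it both ways, a minimal sketch looks like this. The variable name DEMO_SOFTGRIDSERVER is made up so the example can clean up after itself, and I use the "User" target here instead of "Machine" only because it does not need an elevated prompt.

```powershell
$name  = 'DEMO_SOFTGRIDSERVER'   # hypothetical variable name for the demo
$value = 'appvserver'

# Persist it for future sessions via .Net ("User" avoids needing an elevated prompt).
[Environment]::SetEnvironmentVariable($name, $value, 'User')

# Also set it on the Env: drive so the current session sees it right away.
Set-Item -Path "Env:$name" -Value $value

$fromSession = (Get-Item -Path "Env:$name").Value
$fromSession

# Clean up the demo variable from both places.
[Environment]::SetEnvironmentVariable($name, $null, 'User')
Remove-Item -Path "Env:$name"
```

Swap 'User' for 'Machine' (from an elevated session) to match the example above.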

App-V 5.0: Add publishing server.

Simple PS script to add a publishing server.

Import-Module AppVClient

Add-AppvPublishingServer -Name <name> -Url http://<hostname>:<port>

MUCH better so far, Microsoft, MUCH better. Now it just needs to be reliable (something you can't really judge with a beta).

Killing A Process Safely...

Anyone who has ever done any real scripting in Windows knows there are about a million different ways to kill a process.

However, be it VBScript or PowerShell there is only really one "safe" way (not including heavily complicated .Net calls in PS):

taskkill /t /pid <pid>

A couple of quick details. /t tells it to "treekill", or in other words kill all child processes as well (which is pretty crucial when killing virtual apps as their child processes hold the virtual environment open).

/pid is pretty self-explanatory, and you can find a process's PID pretty easily:

$proc = Get-Process -ProcessName WINWORD

$proc.ID

taskkill /t /pid 344
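Putting the two halves together, a rough sketch of a wrapper might look like the following. The function name Stop-ProcessTree is my own invention, not a built-in cmdlet, and the -WhatIf support is there so you can see what would be killed before anything actually runs. taskkill itself only exists on Windows.

```powershell
# Hypothetical helper: looks up every process with the given name and tree-kills each one.
function Stop-ProcessTree {
    [CmdletBinding(SupportsShouldProcess)]
    param(
        [Parameter(Mandatory)]
        [string]$Name
    )

    foreach ($proc in Get-Process -Name $Name -ErrorAction SilentlyContinue) {
        # With -WhatIf this only prints what would happen and skips the taskkill call.
        if ($PSCmdlet.ShouldProcess("$($proc.ProcessName) (PID $($proc.Id))", 'taskkill /t')) {
            taskkill /t /pid $proc.Id
        }
    }
}

# Dry run: lists the matching processes without killing anything.
Stop-ProcessTree -Name WINWORD -WhatIf
```

Drop the -WhatIf once you are happy with what it reports.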

App-V: Scripting Multi-Line Batch Files.

Adding a script to an App-V package is all fine and good (even though it doesn't support anything beyond batch file era scripting languages) but if your script consists of multiple lines and you are adding it after the fact (especially for testing purposes) you may very well find it likes to butcher the script, condensing it all down to one long line.

The solution is very simple.

\r\n

Put that at the end of each line. It is the equivalent of a carriage return (enter...ish), and a new-line.

Is this a bit duct-tapey? Why yes, yes it is. But I suppose pointlessly "enhancing" the UI is a lot more important than making a sophisticated product, so get used to duct taping App-V together.

PowerShell: App-V Suite-In-Place

A PowerShell script to suite two applications in the local OSD cache, for testing interaction and hotfixing a user if need be. At some point I may update it to accept arguments.

$offcGuid = "22C76228-3DF0-48DD-853C-77FDC955CC86"

$sPath = "RTSPS://%SFT_SOFTGRIDSERVER%:322/Power Pivot for Excel.sft"

$sGuid = "F5B20FA7-E437-4E03-885B-3D5B67F3DC22"

$sParam = ""

$sFile = "%CSIDL_PROGRAM_FILES%\Microsoft Analysis Services\AS Excel Client\10\Microsoft.AnalysisServices.AtomLauncher.exe"

$sGuard = "POWPIVOT.1\osguard.cp"

$sSize = "323267785"

$sMand = "FALSE"

$path = "C:\ProgramData\Microsoft\Application Virtualization Client\SoftGrid Client\OSD Cache\"

$dir = Get-ChildItem $path

$list = $dir | where {$_.extension -eq ".osd"}

foreach ($i in $list)

{

[xml] $xml = gc ($path + $i)

if($xml.SOFTPKG.IMPLEMENTATION.CODEBASE.GUID -eq $offcGuid)

{

$final = ($path + $i)

[xml] $excel = gc $final

$newitem = $excel.CreateElement("CODEBASE")

$newitem.SetAttribute("HREF", $sPath)

$newitem.SetAttribute("GUID", $sGuid)

$newitem.SetAttribute("PARAMETERS", $sParam)

$newitem.SetAttribute("FILENAME", $sFile)

$newitem.SetAttribute("SYSGUARDFILE", $sGuard)

$newitem.SetAttribute("SIZE", $sSize)

$newitem.SetAttribute("MANDATORY", $sMand)

$excel.SOFTPKG.IMPLEMENTATION.VIRTUALENV.DEPENDENCIES.AppendChild($newitem)

$excel.Save($final)

}

}

PowerShell: Find and kill a process.

PS Kill:

Stop-Process -ProcessName notepad

PS List:

Get-Process * | sort ProcessName

Initial Response...

Retrieving and setting environment variables in PowerShell.

Get:

Get-ChildItem Env:

$Env:Temp

Set:

Process Level:

$Env:TEMP = "C:\Windows\Temp"

User/Machine Level:

[Environment]::SetEnvironmentVariable("Temp", "Test value.", "User")

[Environment]::SetEnvironmentVariable("Temp", "Test value.", "Machine")

Delete:

[Environment]::SetEnvironmentVariable("Temp", $null, "User")